Numerical Weather Prediction Resources

N

NWP Misconceptions

COMET Module: "Ten Common NWP Misconceptions" (2002-2003). Ten common misconceptions about how NWP models work, and hence how their output can be interpreted, with explanatory corrections of those misconceptions and quite a bit of more or less related material.

Appropriate level: Advanced undergraduates and above.

Summary comments: This module is heavily illustrated, has audio narration, and is very light on text. (A print version substitutes text for the audio narration; animation is lost but static color graphics remain.) A "Test Your Knowledge" section, with feedback, ends the presentation of each misconception. Each misconception is a kind of Trojan horse: the misconception is addressed directly, but it is also used as an occasion to present quite a bit of less narrowly (but still at least broadly) relevant material. The misconceptions vary in their degree of obscurity, but at least some of them look potentially useful in a basic NWP course that doesn't focus exclusively on theory. However, to appreciate the misconceptions and their corrections, students already need to know the basics of how numerical models are formulated and used, and in some cases more than that; some of the misconceptions are quite specific, as the titles below probably suggest. At least one of the misconceptions (I didn't examine all of them closely), "A 20 km Grid Accurately Depicts 40 km Features" (#3 below), makes important points about model resolution, but its examples cite values relevant to 2002-2003 era models and so are less directly relevant today than they were then. Several "misconceptions" refer to the Eta model, which few students today will recognize. The general concepts remain highly relevant, however. Misconceptions addressed include:

O

Operational Model Matrix (JH)

COMET Module: Operational Models Matrix

Appropriate level: Upper division and graduate level.

Overview: An excellent resource for contrasting the basic features of U.S. (and one Canadian) operational models. Links to relevant COMET modules and other online resources are provided. Information on many characteristics of the NMM-NAM remains to be added.

R

Resolution Applet

A central concept in numerical weather prediction is resolution. The notion is that at higher resolution a model can represent more features. However, this increased resolution comes at additional computational expense. Take, for example, a tropical cyclone. Below is an image of Hurricane Fran at 1 km resolution.

Note the structure that is visible: the details of the eye, for example. I am envisioning an applet that lets the user choose the resolution with some sort of slider. The image will be a radar-like image. At 1 km resolution, covering a 512 km by 512 km domain, it will clearly show the structure of the eyewall and of the outer rain bands. Using the slider, one can degrade the resolution. Choices for resolution will be 1, 2, 4, 8, 16, 32, 64, and 128 km. Thus, at the coarsest resolution there are only 16 pixels (4 x 4), whereas at 1 km resolution there are 262,144 pixels (512^2). When the slider is moved from one resolution to another, the "radar" image changes to that resolution. I believe the images can be made using IDV just by changing how many pixels are shown: one can choose to use them all, every 2nd, every 4th, etc. (Kelvin demo for LEAD). The changing image will show the consequences of reducing resolution: the eyewall structure will decay, and at some point the eye will no longer be discernible.

In addition to the changing image, there will be a text readout of the number of pixels. For example, for a resolution of 4 km, nx = 128, ny = 128, and the total number of pixels is 16,384. Beside the number of pixels, there will be a 'model computing time'. For example, we could assume that at 128 km resolution the model takes 1 minute to run. Assuming that halving the grid spacing leads to 4x as many grid points and a time step half as long, each halving multiplies the run time by 8, so a 64 km run would take 8 minutes. In the limit, the 1 km run would take 8^7 = 2,097,152 minutes (roughly four years). This model computing time would be expressed as a common clock, with the elapsed time shaded. For instance, if the model took 1 hour to run, the clock would read 1 pm, with the area of the clock from noon to the hour hand shaded in. The two dramatic visuals would be the image becoming sharper or more degraded, and the clock.
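The readout arithmetic can be sketched in Python. This is a minimal sketch assuming a 512 km square domain, a 1-minute run at 128 km, and, for every halving of the grid spacing, 4x the grid points plus a time step half as long, so cost grows as the cube of the refinement factor. All function names are hypothetical, and `degrade` only mimics the every-Nth-pixel idea with array slicing; it is not an IDV feature.

```python
import numpy as np

DOMAIN_KM = 512        # square domain width, from the applet description
BASE_DX_KM = 128       # coarsest grid spacing offered by the slider
BASE_MINUTES = 1.0     # assumed run time at 128 km
SPACINGS_KM = [1, 2, 4, 8, 16, 32, 64, 128]


def grid_size(dx_km):
    """Return (nx, ny, total pixels) for the square domain at spacing dx_km."""
    n = DOMAIN_KM // dx_km
    return n, n, n * n


def run_minutes(dx_km):
    """Run time if each halving of dx gives 4x the grid points and 2x the
    time steps, i.e. cost grows as (BASE_DX_KM / dx_km) ** 3."""
    return BASE_MINUTES * (BASE_DX_KM / dx_km) ** 3


def hour_hand_degrees(minutes):
    """Angle of the shaded wedge from 12 o'clock: 30 degrees per hour.
    A 12-hour clock face wraps around, so longer runs repeat the angle."""
    return (minutes / 60.0 * 30.0) % 360.0


def degrade(image, stride):
    """Keep every `stride`-th pixel in each direction (simple subsampling)."""
    return image[::stride, ::stride]


if __name__ == "__main__":
    for dx in SPACINGS_KM:
        nx, ny, npix = grid_size(dx)
        print(f"{dx:4d} km  nx={nx:3d}  pixels={npix:7,d}  "
              f"time={run_minutes(dx):12,.0f} min")
    fake_radar = np.random.default_rng(0).random((512, 512))  # stand-in image
    print(degrade(fake_radar, 4).shape)  # (128, 128) for 4 km spacing
```

Because a 12-hour clock face wraps, the finest settings would need something extra (a day counter, say) for the clock to stay honest.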
The idea is to incorporate, but improve upon, images such as this:

An important point is to make sure that the false idea (misconception!) that a 1 km model will resolve a 1 km feature is not communicated. Not sure how to do that...
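A grid with spacing dx cannot represent any wave shorter than 2dx, and even a 2dx wave is captured only when it happens to line up favorably with the grid points. One possible way to make this concrete, sketched here in Python under a simplifying assumption (a 1-D sine "feature" instead of a hurricane), is to sample features of different wavelengths on a 1 km grid and report how much of their true peak-to-peak range survives as the feature shifts relative to the grid. The function names are illustrative only.

```python
import numpy as np


def sample_on_grid(wavelength_km, dx_km, phase=0.0, domain_km=16.0):
    """Values that a grid with spacing dx_km 'sees' of a sine feature."""
    x = np.arange(0.0, domain_km, dx_km)          # grid-point locations
    return np.sin(2.0 * np.pi * x / wavelength_km + phase)


def sampled_range(wavelength_km, dx_km, n_phases=9):
    """Smallest and largest peak-to-peak range the grid records as the
    feature is shifted through phases 0..pi. The true range is always 2."""
    ranges = []
    for phase in np.linspace(0.0, np.pi, n_phases):
        v = sample_on_grid(wavelength_km, dx_km, phase)
        ranges.append(v.max() - v.min())
    return min(ranges), max(ranges)


if __name__ == "__main__":
    dx = 1.0  # a "1 km model"
    for wavelength in (1.0, 2.0, 4.0, 8.0):
        lo, hi = sampled_range(wavelength, dx)
        print(f"{wavelength:3.0f} km feature ({wavelength / dx:.0f} dx): "
              f"grid range {lo:.2f} to {hi:.2f}, true range 2.00")
```

A 1 km feature on a 1 km grid falls on the same phase at every grid point, so it shows up as a constant with no structure at all; a 2 km feature can vanish entirely depending on where it sits; only features spanning several grid lengths keep most of their amplitude wherever they are.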

S

Spectral Wave Addition

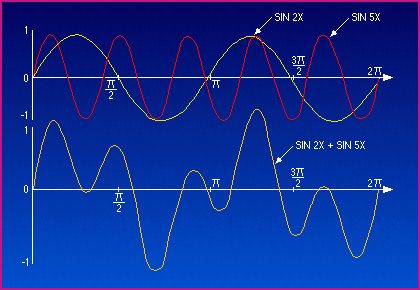

A concept that is difficult to get across is how a seemingly random wave can be decomposed into a sum of sine waves. The image below is a little old, but gives a great visual of the process:

For the 'mark 2' version, I envision an applet that looks like this but has, perhaps, four lines. The top line would show sin(x) and cos(x) (much like sin(2x) and sin(5x) on the top line of the above image), and the second line would show sin(2x) and cos(2x). The third line would be the sum of the four waves above, similar to the bottom line of the above image. Each of the four waves would have an 'amplitude slider' for changing its amplitude. For instance, if one set the amplitude of sin(x) to 1 and all the other waves (cos(x), sin(2x), cos(2x)) to 0, one would get that same sine wave back on the sum line. By changing the amplitudes of the four waves, one can create increasingly complex patterns in their sum. I believe the sliders will need to be discretized, perhaps in increments of 0.25, to keep the number of possibilities low. To make this even more interactive, the bottom (4th) line would show some predetermined structure. The goal would then be for the user to manipulate the amplitudes of the four waves (sin(x), sin(2x), cos(x), cos(2x)) until their sum matched the 4th line. We could have an 'easy', 'moderate', and 'hard' option for this 4th line, with the easy one being something like sin(x) + 0.5*cos(x), and the hard one a combination of all four waves. The hard one would try to match the observed longwave structure shown in the "Model Structure and Dynamics" module.
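The sum-line arithmetic and the "does it match?" check might look like the following Python sketch. It uses the four basis waves, the 0.25 slider step, and the easy target sin(x) + 0.5*cos(x) from the text; the dictionary keys, the 361-point x-axis, and the matching tolerance are arbitrary choices made for illustration.

```python
import numpy as np

STEP = 0.25                                   # slider increment suggested above
BASIS = {                                     # the four waves in the applet
    "sin(x)": np.sin,
    "cos(x)": np.cos,
    "sin(2x)": lambda x: np.sin(2 * x),
    "cos(2x)": lambda x: np.cos(2 * x),
}
X = np.linspace(0.0, 2.0 * np.pi, 361)        # one period, 1-degree spacing


def snap(amplitude):
    """Round a slider value to the nearest allowed increment."""
    return round(amplitude / STEP) * STEP


def combine(amplitudes):
    """Sum line: amplitude-weighted sum of the four waves (missing keys = 0)."""
    total = np.zeros_like(X)
    for name, wave in BASIS.items():
        total += snap(amplitudes.get(name, 0.0)) * wave(X)
    return total


def matches(amplitudes, target, tol=1e-6):
    """True when the user's sum reproduces the target curve everywhere."""
    return bool(np.max(np.abs(combine(amplitudes) - target)) < tol)


# The 'easy' target from the text
EASY_TARGET = np.sin(X) + 0.5 * np.cos(X)
```

For example, `matches({"sin(x)": 1.0, "cos(x)": 0.5}, EASY_TARGET)` is True, while setting only sin(x) to 1 does not match. Snapping inside `combine` means the check only ever sees values a discretized slider could actually produce.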

Thus, the 'hard' option among the three targets would be something resembling the wave in the above image. That might be hard to pull off with only four waves, but the motivation would be to show that a combination of something students can visualize (sin and cos) can represent the real, complicated atmospheric flow field.
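To build the 'hard' target (or an answer key for it), one option, which is an assumption rather than anything the module prescribes, is a least-squares fit of the four waves to a longwave profile. For evenly spaced points spanning one full period the four waves are mutually orthogonal, so this fit is the same as the discrete Fourier projection. The profile below is invented for illustration, with a small sin(5x) component that four waves cannot capture.

```python
import numpy as np

# Evenly spaced points over one full period (endpoint excluded)
x = np.linspace(0.0, 2.0 * np.pi, 360, endpoint=False)

# Invented stand-in for an observed longwave profile; the sin(5x) term is
# detail that the four applet waves cannot represent.
target = (0.8 * np.sin(x) - 0.4 * np.cos(x) + 0.6 * np.sin(2 * x)
          + 0.3 * np.cos(2 * x) + 0.2 * np.sin(5 * x))

# Columns are the four applet waves, in slider order.
basis = np.column_stack([np.sin(x), np.cos(x), np.sin(2 * x), np.cos(2 * x)])


def best_amplitudes(profile):
    """Least-squares amplitudes of the four waves for the given profile."""
    amps, *_ = np.linalg.lstsq(basis, profile, rcond=None)
    return amps


amps = best_amplitudes(target)
slider_amps = np.round(amps / 0.25) * 0.25      # nearest 0.25 slider setting
residual = target - basis @ amps                 # what four waves cannot fit
rms_residual = np.sqrt(np.mean(residual ** 2))

if __name__ == "__main__":
    print("best amplitudes:", np.round(amps, 3))
    print("slider settings:", slider_amps)
    print("rms misfit:", round(float(rms_residual), 4))
```

With this made-up profile the fit recovers 0.8, -0.4, 0.6 and 0.3, the nearest slider settings are 0.75, -0.5, 0.5 and 0.25, and the leftover sin(5x) detail shows up as the misfit, which is one way to show students what a truncated set of waves leaves out.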

T

Ten Misconceptions about NWP (SC)

Modules: "Ten Common NWP Misconceptions" and "Top Ten Misconceptions about NWP Models"

Appropriate level: Advanced undergraduate and above.

General comments: These two modules are similar. I suggest merging them and keeping the merged module on the MetEd website.

Turbulent Processes (SC)

COMET Module: Influence of Model Physics on NWP Forecasts

This simple figure describes the planetary boundary layer processes in NWP models and could serve as supplementary material for this module. (Source: WRF User's Workshop) http://www.met.tamu.edu/class/metr452/models/2001/PBLproject.html

(Discussion of the complexity of representing radiative processes in a model, including the effects of clouds and clear-sky biases in the eta model--hope this gets updated! Brief summary of how models address
